Stonks Only Go Up: Building a WallStreetBets Sentiment Model

What I Wish I Knew Building a Full-Stack ML System

Over the past few years, I’ve spent a majority of my time trying to learn more about ML. From learning about how to approach a problem to MLOps, my projects (and mentors) have been incredible resources in taking me from a noob to a baseline level of competence as a machine learning engineer.

There are many tutorials and classes (my favorite being CS231N) that delve into the fundamentals of machine learning, a necessity for any later research or serious application of ML. Additionally, there are millions (~80M!) of “Hello World” versions of machine learning, many of which use a framework to build a model to classify handwritten digits. However, I found content on building a solution to a business problem to be relatively sparse (at least where I was searching). So here’s my attempt at filling in the gap between basic blogs and research papers on arXiv.

This article (and possibly others) will cover some work I did with TopStonks, how we approached an ML problem end to end, and things I learned along the way.

WTF is TopStonks

TopStonks aggregates “The best advice from the worst investors on the internet”. At a high level, they utilize data from financial communities (e.g. r/wallstreetbets) and turn that into meaningful insights. They have been featured in major financial publications including the Wall Street Journal, Business Insider and Forbes.

Working with an accomplished friend, I was lucky enough to get access to this data and learn from him about how to approach problems like this.

Deriving Sentiment

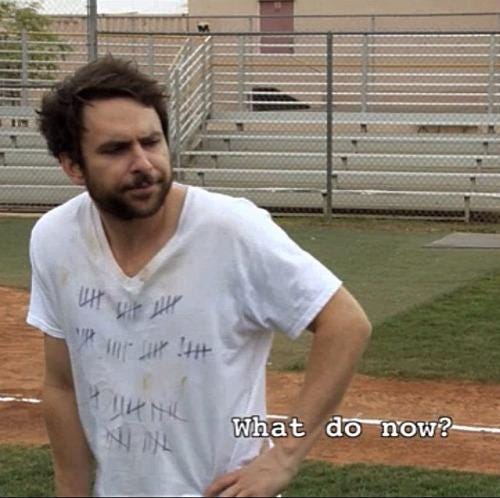

Once you have all this data, the question then becomes what do you do with it? Theoretically (and I’m sure some people do), you can parse every comment to find some alpha. But this is not scalable, unless your day job is to just read Reddit threads.

Ok, what next? You could apply some hard rules and search for terms like "🚀" or "to the moon 🌙" to score a rough sentiment, but that only gets you so far. These rules capture only a small part of the nuance of language, and learning that nuance is not easy!
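Here is a minimal sketch of what such hard rules might look like. The phrase lists and the hits-minus-hits scoring are my own illustrative assumptions, not the rules TopStonks actually used.

```python
# Illustrative phrase lists; assumptions for this sketch, not a real rule set.
BULLISH_PHRASES = ["🚀", "to the moon", "tendies"]
BEARISH_PHRASES = ["puts", "shitting the bed"]


def rule_score(comment: str) -> int:
    """Return bullish hits minus bearish hits; 0 means the rules have no opinion."""
    text = comment.lower()
    bullish_hits = sum(phrase in text for phrase in BULLISH_PHRASES)
    bearish_hits = sum(phrase in text for phrase in BEARISH_PHRASES)
    return bullish_hits - bearish_hits


if __name__ == "__main__":
    examples = [
        "SPCE to the moon 🚀",
        "Tsla puts, musk is mole person. #DD",
        "what do i buy now :( ?",
    ]
    for comment in examples:
        print(rule_score(comment), comment)
```

A score of 0 covers both "no phrase matched" and "mixed signals", which is exactly where plain substring rules run out of steam.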

For example, how would you write rules to classify these comments? Not so easy!

SPCE is already printing tendies and continuing to go up as I type this

MSFT and BABA both shitting the bed, my spy calls didnt get filled this morning, my V calls didnt get filled last minute last night (getting some REAL fomo there). what do i buy now :( ?

This is where we can use machine learning to determine the sentiment of a comment, i.e. how bullish an investor is. We have the data and we have a baseline for a rules-based approach, but it’s not good enough.

Looking At Your Data

One thing that gets lost in many of the tutorials and classes is that data in the real world is USUALLY messy[1]. At the very least, someone made some nuanced choices along the way, so you’d better be sure you know exactly what you’re working with.

Data is the most important part of the process, and if you don’t take time to understand the ins and outs, your model will suffer. Your model will ONLY be as good as the data you are working with. When working with my friend, I was truly surprised to see that the model development cycle consisted of about 75% working with data[2] (exploratory data analysis, labeling, and cleaning) and about 25% model building and tuning. His attention to detail on cleaning and labeling data was surprising; I assumed most people just did some basic EDA. Most of our first few weeks were spent diving into the data trying to answer:

What macro things are people talking about? Are themes local to the thread or are they shared across threads?

Are there comments we can omit? Is there spam? What does it look like?

Are stocks talked about in different ways? Are there stock-specific verbiages seen across a longer time period (e.g. on the order of months)?

Without going through each comment, we would have missed out on so much. We could have thrown the kitchen sink at the problem, but we would eventually have spent that time figuring out why our model (most likely) sucked.

If you take away one thing, it’s to spend more time with your data. Get into the weeds and understand as much as you can about what you are working with. It will especially pay off when looking at the pitfalls of the model. Granted, it’s easier to look into the data when it’s human-understandable and doesn’t require domain expertise, as genomic data does.

Label Data Like Your Model Depends on It

So, your data journey begins with millions of unlabelled comments.

Label the data! This takes time, but it matters: labeling is how you start to understand what’s confusing and build context for the problem.

Although tedious, attention to detail is paramount. No one will (usually) put more effort and care into the boring stuff than you. This is something I learned the hard way, as early iterations of our model stunk, primarily due to my lack of attention and poor data quality.

The Fun Part: Modeling

We now needed to see what a naive approach would yield, then iterate on it. Sometimes the simplest approach produces a 6/10 or 7/10 product, and small hacks can take it to the next level.

So we wrote some basic rules for what we thought were strong signals of a bullish or bearish comment, one such case being "X to the moon 🚀". These rules are an example of high-precision, low-recall classifiers: they’re accurate when a rule matches a comment, but otherwise (which is most of the time) they have no opinion.
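To make "high precision, low recall" concrete, here is a rough sketch that measures both for a single rule on a tiny hand-labeled set. The rule, the label constants, and the five toy examples are made up for illustration; they are not our actual rules or data.

```python
BULLISH, BEARISH, ABSTAIN = 1, 0, -1


def moon_rule(comment: str) -> int:
    """Vote BULLISH on 'to the moon' or a rocket emoji, otherwise abstain."""
    text = comment.lower()
    return BULLISH if ("to the moon" in text or "🚀" in text) else ABSTAIN


def precision_and_recall(rule, labeled_comments, target=BULLISH):
    """Precision and recall of `rule` for the `target` class on (text, label) pairs."""
    votes = [(rule(text), label) for text, label in labeled_comments]
    true_positives = sum(1 for vote, label in votes if vote == target and label == target)
    predicted = sum(1 for vote, _ in votes if vote == target)
    actual = sum(1 for _, label in votes if label == target)
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Toy hand-labeled examples, invented for this sketch.
TOY_LABELED = [
    ("GME to the moon 🚀", BULLISH),
    ("STONKS only go up", BULLISH),
    ("HOLD THE LINE BULLS", BULLISH),
    ("Tsla puts, musk is mole person. #DD", BEARISH),
    ("MSFT and BABA both shitting the bed", BEARISH),
]

if __name__ == "__main__":
    precision, recall = precision_and_recall(moon_rule, TOY_LABELED)
    print(f"precision={precision:.2f} recall={recall:.2f}")
```

On this toy set the rule is right the one time it fires but misses two of the three bullish comments, which is the pattern described above.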

Ok, so we wanted our models to be more sensitive to the actual text and content, but we didn’t have enough labeled data to train a sentiment model from scratch. What should we do? Utilize existing models!

Thankfully, HuggingFace has many binary sentiment classification models trained on similar data, like movie reviews.

Evaluating these models showed that they were better than a pure rules-based approach, but they handled some edge cases specific to our data poorly. The datasets these HuggingFace models were trained on differ from Reddit comments, which are full of something I like to call “meme-speak”. Things like

Tsla puts, musk is mole person. #DD

would never be found in a Yelp review or a Rotten Tomatoes movie review.

We can even see how inaccurately such a model scores out-of-distribution data. For example, the textattack/bert-base-uncased-rotten-tomatoes model scores the following comments as incredibly bearish:

SPCE to the moon

STONKS only go up

SPCE is already printing tendies and continuing to go up as I type this

HOLD THE LINE BULLS 🐂🐂🐂

when it’s clear these are bullish comments.
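If you want to try this yourself, a sketch along these lines should work with the Hugging Face `transformers` text-classification pipeline. Two assumptions to flag: I am treating the checkpoint's `LABEL_1` as "positive" and reading a positive movie review as a stand-in for bullish, and the helper names are mine. Check the model card before trusting the label mapping.

```python
from typing import Callable, Dict, List

MODEL_NAME = "textattack/bert-base-uncased-rotten-tomatoes"
# Assumption: this checkpoint reports "LABEL_1" for positive reviews.
POSITIVE_LABEL = "LABEL_1"


def load_classifier(model_name: str = MODEL_NAME):
    """Build a text-classification pipeline (downloads the model on first use)."""
    from transformers import pipeline  # lazy import so the helpers below work without it

    return pipeline("text-classification", model=model_name)


def bullish_probability(prediction: Dict) -> float:
    """Turn a {'label', 'score'} prediction into P(positive), read here as P(bullish)."""
    score = float(prediction["score"])
    return score if prediction["label"] == POSITIVE_LABEL else 1.0 - score


def score_comments(comments: List[str], classifier: Callable) -> Dict[str, float]:
    """Score each comment with the classifier and return comment -> P(bullish)."""
    predictions = classifier(comments)
    return {c: bullish_probability(p) for c, p in zip(comments, predictions)}


if __name__ == "__main__":
    comments = [
        "SPCE to the moon",
        "STONKS only go up",
        "HOLD THE LINE BULLS 🐂🐂🐂",
    ]
    for comment, p in score_comments(comments, load_classifier()).items():
        print(f"{p:.3f}  {comment}")
```

Passing the classifier in as an argument keeps the scoring code independent of any one model, which makes it easy to swap in other pretrained checkpoints.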

At a Crossroads

We now have a bunch of pretrained models trained on tangential data. We also have hand-written rules that are highly accurate when they do match, which is not often. How can we build a model combining all of these different signals?

Snorkel

Snorkel! Snorkel allows users to create rules and learns a weighting to best approximate the true labels. Instead of having to choose one model or a set of rules, we could combine them into an ensemble!

Writing these rules was no easy task, however. We spent a lot of time iterating on what to include in the functions, mainly thinking about:

How many pretrained models do we need? Is there a number of models beyond which adding more gives diminishing returns?

Which keywords are easy predictors of sentiment?

Does comment length matter?

A few example functions:

    @labeling_function
    def fed(text):
        phrases = ['fed', ' powell']
        return BULLISH if contains_phrase(text, phrases) else ABSTAIN

    @labeling_function
    def hold(text):
        phrases = ['holding', 'hold', 'holder', 'hodl', 'diamond hand', 'diamond hands']
        return BULLISH if contains_phrase(text, phrases) else ABSTAIN
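To show how votes from rules and pretrained models can be combined, here is a dependency-free sketch. Big caveat: it uses a plain unweighted majority vote as a stand-in, whereas Snorkel learns a weighting over the labeling functions. The `contains_phrase` helper, the `puts` rule, the 0.2/0.8 thresholds, and the label constants are all assumptions made for this example.

```python
from collections import Counter

BULLISH, BEARISH, ABSTAIN = 1, 0, -1


def contains_phrase(text, phrases):
    """Hypothetical helper: case-insensitive substring match against any phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in phrases)


def hold(text):
    return BULLISH if contains_phrase(text, ["hold", "hodl", "diamond hand"]) else ABSTAIN


def puts(text):
    # Hypothetical bearish rule, added so the vote has two sides.
    return BEARISH if contains_phrase(text, [" puts", "shitting the bed"]) else ABSTAIN


def make_model_lf(bullish_prob, low=0.2, high=0.8):
    """Wrap a P(bullish) scorer as a labeling function that abstains when unsure."""
    def model_lf(text):
        p = bullish_prob(text)
        if p >= high:
            return BULLISH
        if p <= low:
            return BEARISH
        return ABSTAIN
    return model_lf


def majority_vote(text, labeling_functions):
    """Unweighted majority over non-abstaining votes; ties and no votes abstain."""
    votes = [v for v in (lf(text) for lf in labeling_functions) if v != ABSTAIN]
    counts = Counter(votes)
    if counts[BULLISH] == counts[BEARISH]:
        return ABSTAIN
    return BULLISH if counts[BULLISH] > counts[BEARISH] else BEARISH


if __name__ == "__main__":
    # A constant scorer stands in for a real pretrained model here.
    lfs = [hold, puts, make_model_lf(lambda text: 0.9)]
    print(majority_vote("HOLD THE LINE BULLS", lfs))
```

Wrapping a pretrained model as one more labeling function, abstaining in the uncertain middle, is what lets rules and models sit in the same ensemble.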

At the end of the day, we came up with an ensemble model using ~50 rules and ~5 pretrained models. We could now more accurately classify comments such as

GME to the moon!

or even more complex comments like

Earnings coming up , I think they’ll do really well and surpass earnings. They’re also down from their ATH which imo will shoot up the price midway from now to ATH. Everyone even boomers want to cut the cord and buy a roku stick, expected sales on tvs with roku built it surged during the holidays, and they’re making a significant money from ads from their free platform. Idk. My opinion, change...

Caveats

However, the model was not perfect. For one, not all comments are either bullish or bearish. Some are neutral or exist on a spectrum:

Yeah I’m in the same boat. Apple and MSFT trading at record highs and high PE? I’m 30 years from retirement...I’ll buy on the way up, on the way down, and sideways for the next 5 years at least. Might dump more $$ on large corrections...but I’ll DCA for the next 5-10 years and worry about it when I’m 60. I’m most confident in those 2 names specifically then any other.

How do you model a comment like this where they are (somewhat) bearish/nervous in the short term but bullish long term? Do you add more categories or do you (somehow) give a discrete value to the comment?

In general, the model was better suited to short comments with “meme-speak” sprinkled in. It tended to be extremely confident on comments with phrases like stonks only go up but less certain on other comments. Given more time and resources, my friend and I would have loved to look more into predicting emotions based on Plutchik’s Wheel of Emotions, but it would have taken serious effort to crowdsource the labels.

We then tried using weak supervision to improve the model. Using the ensemble’s confident outputs as ground truth, and also labeling a few thousand more comments by hand, we fine-tuned a Large Language Model (LLM), with little improvement.
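Roughly, building that training set can be sketched like this. The 0.9 confidence threshold, the function names, and the choice to let hand labels override pseudo-labels are assumptions for illustration, not a record of exactly what we did.

```python
BULLISH, BEARISH = 1, 0


def confident_pseudo_labels(scored_comments, threshold=0.9):
    """Keep only comments whose P(bullish) is confidently high or confidently low."""
    pseudo_labeled = []
    for text, p_bullish in scored_comments:
        if p_bullish >= threshold:
            pseudo_labeled.append((text, BULLISH))
        elif p_bullish <= 1.0 - threshold:
            pseudo_labeled.append((text, BEARISH))
    return pseudo_labeled


def build_training_set(scored_comments, hand_labeled, threshold=0.9):
    """Merge pseudo-labels with hand labels; hand labels win on conflicts."""
    merged = dict(confident_pseudo_labels(scored_comments, threshold))
    merged.update(dict(hand_labeled))
    return sorted(merged.items())


if __name__ == "__main__":
    scored = [
        ("GME to the moon!", 0.97),
        ("what do i buy now :( ?", 0.55),
        ("Tsla puts, musk is mole person. #DD", 0.03),
    ]
    hand = [("what do i buy now :( ?", BEARISH)]
    for text, label in build_training_set(scored, hand):
        print(label, text)
```

The catch is that pseudo-labels inherit the ensemble's blind spots, so the fine-tuned model mostly learns what the ensemble already knew.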

Active Learning Attempts

Trying to improve the fine-tuned LLM, we also tried active learning. Active learning is a technique for selecting the most beneficial examples to label. Put another way, what examples do I need to label to most improve my model? Figuring out which examples to choose is difficult, however. One standard way to choose important examples is to use the model’s uncertainty (calculated as the entropy of its predictions). Even so, we found little to no improvement over our ensemble.
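Entropy-based uncertainty sampling is simple enough to sketch in a few lines. This assumes a binary bullish/bearish model that outputs P(bullish); the function names and the labeling budget are hypothetical.

```python
import math


def binary_entropy(p_bullish: float) -> float:
    """Shannon entropy (in bits) of a bullish/bearish prediction; peaks at p = 0.5."""
    if p_bullish <= 0.0 or p_bullish >= 1.0:
        return 0.0
    q = 1.0 - p_bullish
    return -(p_bullish * math.log2(p_bullish) + q * math.log2(q))


def select_for_labeling(scored_comments, budget):
    """Return the `budget` unlabeled comments the model is least sure about."""
    ranked = sorted(
        scored_comments,
        key=lambda pair: binary_entropy(pair[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:budget]]


if __name__ == "__main__":
    scored = [
        ("stonks only go up", 0.99),
        ("what do i buy now :( ?", 0.52),
        ("I'll DCA for the next 5-10 years", 0.30),
    ]
    print(select_for_labeling(scored, budget=2))
```

Comments scored near 0.5 get labeled first, while the confidently scored meme-speak is left alone.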

Wrapping Up

As with any project, there are many things you wish you had time to keep trying and figure out. Even with this (already too long) article.

This project really improved my understanding of applied ML and how to approach most problems. I am eternally grateful to my friend for teaching me a smol portion of how to be effective at building useful ML products and wanted to share a slice of what I have learned.

[1] At least in my experience, n=1

[2] For a more detailed how-to on training a NN, see Andrej Karpathy’s A Recipe for Training Neural Networks